KEMU: A Declarative Approach to Emulating Kubernetes Clusters at Scale

Optimizing scheduling efficiency for AI workloads requires extensive experimentation and observation due to constrained supply and high costs of datacenter GPUs. Maximizing infrastructure efficiency through optimized scheduling and high-density bin packing is critical for achieving high workload throughput, resource utilization, and cost efficiency. Introducing scheduler modifications at production scale is risky: configuration errors can render workloads unschedulable, causing multi-day delays for dozens or hundreds of ML engineers, idling capacity, wasted on-demand resources, and delayed delivery. It is imperative that scheduler modifications with a big blast radius are tested and verified in a safe environment before shipping to production.

This problem is not new: virtual or emulated clusters for scheduling experimentation and workload right-sizing have been around for a long time. Emulated clusters provide a limited version of the real cluster experience for a fraction of the price and compute resources. To understand what an effective emulation solution needs, let’s first establish the requirements based on a typical production GPU cluster setup.

Requirements

Let’s consider the following cluster setup to provide background for the functionality of the emulated cluster:

- A Kubernetes cluster with 1,000+ GPU nodes of different types;

- The nodes are spread across multiple topology domains (availability zones, racks);

- Specialized scheduling and training operators are running on the cluster;

- Observability is provided via the Prometheus stack.

Based on the provided example, we can derive the following high-level requirements for the emulated cluster:

- Large cluster emulation using few resources (e.g., it can be run on a laptop or as a part of a CI job);

- Ability to execute workloads and schedule pods on emulated nodes;

- Configurability of cluster shape and size: number of nodes, instance types, node resources, and placement;

- Ability to install, configure, and run unemulated applications such as Kubernetes operators and observability stack;

- Reproducibility of emulated clusters;

- Automation for cluster lifecycle management.

There exist well-known technologies and techniques that address multiple (but not all) requirements from the list. The next section provides a brief overview.

Prior art

The most frequently used solution for this set of requirements is a combination of a Kind cluster with emulated KWOK nodes. In such setups, the Kind cluster runs the Kubernetes control plane components and, potentially, a data plane, while KWOK manages the emulated data plane and pod lifecycle. Neither technology solves the problem in full on its own: Kind provides a fully conformant Kubernetes control plane and data plane in Docker but doesn’t scale well, whereas KWOK scales to thousands of nodes but doesn’t easily allow running real workloads (such as schedulers and operators) because its data plane is fully emulated.

There exist other technologies in the emulation domain which are less applicable to this problem but are worth mentioning:

- SimKube: a record-and-replay simulation environment for Kubernetes based on KWOK;

- Minikube: similar to Kind but runs Kubernetes control plane in a VM instead of Docker;

- Virtual Kubelet: an open-source Kubernetes kubelet implementation that masquerades as a kubelet. It can be used to implement a highly customizable emulation of kubelet behavior;

- kubemark: a performance testing tool which allows users to run experiments on simulated clusters.

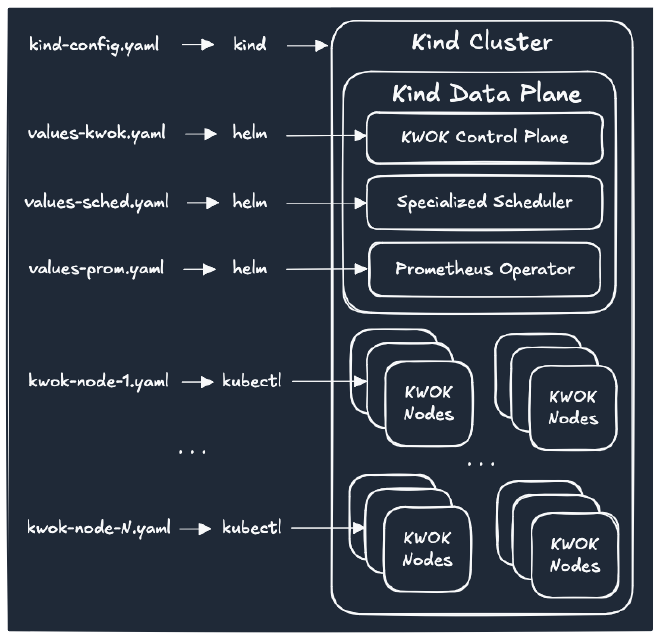

Once the control plane and worker nodes are ready, the required dependencies and addons can be installed from Helm Charts, kustomize, or raw Kubernetes manifests using the helm or kubectl CLIs, providing the component set needed for the functionality under test and for observability.

The main problem with this approach lies in the configurability and automation of the cluster bootstrap. Every component used in the target setup is versioned and might require custom configuration (for example, a Helm values file). Kind and KWOK might require custom configuration too. The diagram below demonstrates an example of such a cluster bootstrap process:

With a small number of components and an infrequently changing control plane setup, the whole process can be automated with a Makefile, helper scripts, and predefined configuration files. However, when it comes to configuring and managing the emulated data plane, the automation becomes quite involved and might require excessive templating. Creating a thousand emulated nodes with varying capacity and different placement options depending on the desired cluster shape, and doing so reproducibly, can quickly become overwhelming with scripting and templating alone.

For example, creating a 1,000-node cluster with 3 instance types across 3 availability zones using KWOK manifests requires:

- 1,000 individual node YAML files or repeated application of predefined templates;

- Custom scripts to manage naming, labeling, and placement;

- Manual coordination of Kind, KWOK, and Helm installations;

- Significant effort to modify cluster shape or rerun experiments.
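To make the list above concrete, here is a minimal sketch of the kind of helper script such a setup tends to accumulate. It is illustrative only: the per-zone node count, the zone label key, and the GPU capacity are assumptions, and the manifest is trimmed to the fields that matter here (the `kwok.x-k8s.io/node` annotation and taint, and the `type: kwok` label, follow the conventions used in KWOK's own examples).

```shell
#!/bin/sh
# Hypothetical helper: emits one KWOK-style Node manifest per emulated node.
# Instance types, zone names, and capacity values are illustrative assumptions.

emit_node() {
  # $1 = instance type, $2 = availability zone, $3 = index within the zone
  cat <<EOF
---
apiVersion: v1
kind: Node
metadata:
  name: $1-$2-$3
  annotations:
    kwok.x-k8s.io/node: fake
  labels:
    type: kwok
    node.kubernetes.io/instance-type: $1
    topology.kubernetes.io/zone: $2
spec:
  taints:
  - effect: NoSchedule
    key: kwok.x-k8s.io/node
    value: fake
status:
  capacity:
    nvidia.com/gpu: 8
EOF
}

generate_nodes() {
  # $1 = number of nodes per (instance type, zone) pair
  for instance_type in a2-ultragpu-8g a3-highgpu-8g a3-ultragpu-8g; do
    for zone in use1 use2 use3; do
      i=0
      while [ "$i" -lt "$1" ]; do
        emit_node "$instance_type" "$zone" "$i"
        i=$((i + 1))
      done
    done
  done
}

# Example: 2 nodes per type and zone gives 3 * 3 * 2 = 18 manifests.
generate_nodes 2 | grep -c '^kind: Node'
```

Even this reduced version hard-codes the cluster shape into loops. Uneven placement, per-type capacity, or extra labels each add another branch or template, which is where this kind of script becomes hard to maintain.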

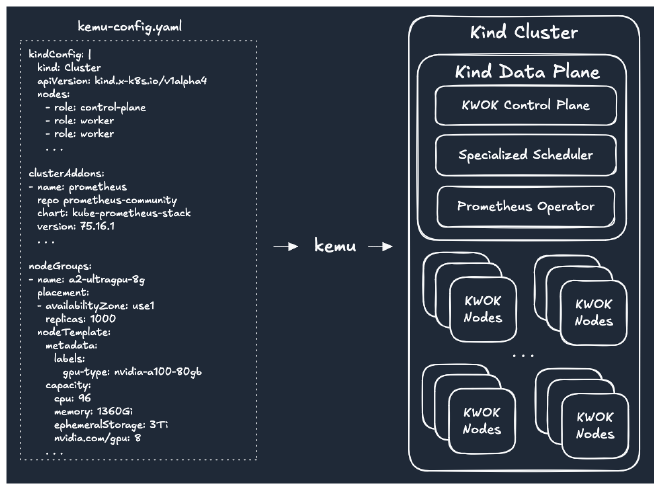

Automating this with Makefiles and shell scripts is fragile and difficult to maintain as cluster requirements evolve. Making this process repeatable and clusters easily reconfigurable requires a programmatic solution driven by declarative configuration. KEMU (Kubernetes Emulator Utility) is a tool designed to eliminate this complexity: one declarative specification replaces multiple node manifests and templates, coordination scripts, and fragmented configuration files.

Introducing KEMU - A Declarative Kubernetes Emulator Utility

KEMU provides a single-spec declarative approach for configuring control plane nodes, installing cluster addons, and defining emulated cluster nodes with various capacity and placement options.

KEMU builds on Kind for control plane and worker node deployment, which is used for running auxiliary software required for experimentation. Examples include Prometheus Operator for observability, custom schedulers (Volcano, YuniKorn), workload management operators (Kueue, KubeRay), etc. Running these components requires actual Kubelet(s) to be available for scheduling, and Kind provides sufficient functionality for this.

The kubelet emulation is based on KWOK. KEMU provides a lightweight configuration scheme for defining node groups with various properties and automatically generates the specified number of nodes.

Creating a cluster with KEMU is as easy as invoking the utility and providing it with a path or a URL to the cluster configuration file:

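    # Install the KEMU binary (go install needs an explicit version outside of a Go module):
    go install github.com/datastrophic/kemu@latest

    # Create a cluster from a remote cluster configuration file:
    kemu create-cluster --kubeconfig $(pwd)/kemu.config --cluster-config https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/gcp-small.yaml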

The cluster is accessible with standard Kubernetes tools and SDKs using the kubeconfig file:

    export KUBECONFIG=$(pwd)/kemu.config
    kubectl get nodes

    # Example output:
    # NAME                    STATUS   ROLES           AGE     VERSION
    # a2-ultragpu-8g-use1-0   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use1-1   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use1-2   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use1-3   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use1-4   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use2-0   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use2-1   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use2-2   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use2-3   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use2-4   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use3-0   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use3-1   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use3-2   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use3-3   Ready    agent           99s     v1.33.1
    # a2-ultragpu-8g-use3-4   Ready    agent           98s     v1.33.1
    # a3-highgpu-8g-use1-0    Ready    agent           98s     v1.33.1
    # a3-highgpu-8g-use1-1    Ready    agent           98s     v1.33.1
    # a3-highgpu-8g-use1-2    Ready    agent           98s     v1.33.1
    # a3-highgpu-8g-use1-3    Ready    agent           98s     v1.33.1
    # a3-highgpu-8g-use1-4    Ready    agent           97s     v1.33.1
    # a3-highgpu-8g-use2-0    Ready    agent           97s     v1.33.1
    # a3-highgpu-8g-use2-1    Ready    agent           97s     v1.33.1
    # a3-highgpu-8g-use2-2    Ready    agent           97s     v1.33.1
    # a3-highgpu-8g-use2-3    Ready    agent           97s     v1.33.1
    # a3-highgpu-8g-use2-4    Ready    agent           96s     v1.33.1
    # a3-ultragpu-8g-use1-0   Ready    agent           96s     v1.33.1
    # a3-ultragpu-8g-use1-1   Ready    agent           96s     v1.33.1
    # a3-ultragpu-8g-use1-2   Ready    agent           96s     v1.33.1
    # a3-ultragpu-8g-use1-3   Ready    agent           96s     v1.33.1
    # a3-ultragpu-8g-use1-4   Ready    agent           95s     v1.33.1
    # kwok-control-plane      Ready    control-plane   7m58s   v1.33.1
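With more than a handful of nodes, a per-zone summary is easier to read than the raw listing. The helper below is a small sketch, not part of KEMU: it assumes node names follow the `<group>-<zone>-<index>` pattern visible in the listing above and that emulated nodes report the `agent` role in the third column. The function name is hypothetical.

```shell
# Tally emulated nodes per availability zone from `kubectl get nodes --no-headers` output.
# Assumes names look like a2-ultragpu-8g-use1-0 and that ROLES is the third column.
count_nodes_by_zone() {
  awk '$3 == "agent" {
         n = split($1, parts, "-")   # e.g. a2 ultragpu 8g use1 0
         zones[parts[n - 1]]++       # the second-to-last field is the zone
       }
       END { for (z in zones) print z, zones[z] }' | sort
}

# Usage against a live cluster (KUBECONFIG must point at the KEMU cluster):
#   kubectl get nodes --no-headers | count_nodes_by_zone
```

The control plane node is skipped because its role is not `agent`.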

Cluster Specification

The following example demonstrates key components of the KEMU cluster specification. The specification contains 3 main sections:

- kindConfig is a YAML configuration used for creating the Kind cluster. This is a standard Kind configuration that is passed to the Kind cluster provisioner without any modifications;

- clusterAddons define a list of Helm Charts to be installed as part of the cluster bootstrap process. Each cluster addon can be parameterized with valuesObject containing Helm Chart values for the installation;

- nodeGroups define emulated node groups sharing similar properties (instance type, capacity) and the placement of the nodes. Node placement allows configuring the number of nodes in different availability zones.

Example specification:

    apiVersion: kemu.datastrophic.io/v1alpha1
    kind: ClusterConfig
    spec:
      kindConfig: |
        kind: Cluster
        apiVersion: kind.x-k8s.io/v1alpha4
        nodes:
        - role: control-plane
        - role: worker
        - role: worker
      clusterAddons:
      - name: prometheus
        repoName: prometheus-community
        repoURL: https://prometheus-community.github.io/helm-charts
        namespace: monitoring
        chart: prometheus-community/kube-prometheus-stack
        version: 75.16.1
        valuesObject: |
          alertmanager:
            enabled: false
      nodeGroups:
      - name: a2-ultragpu-8g
        placement:
        - availabilityZone: use1
          replicas: 50
        - availabilityZone: use2
          replicas: 50
        - availabilityZone: use3
          replicas: 50
        nodeTemplate:
          metadata:
            labels:
              datastrophic.io/gpu-type: nvidia-a100-80gb # Custom label for GPU targeting.
          capacity:
            cpu: 96
            memory: 1360Gi
            ephemeralStorage: 3Ti
            nvidia.com/gpu: 8
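As a quick sanity check on a node group definition, the emulated capacity it should produce can be worked out from the placement entries. The snippet below is a back-of-the-envelope sketch with a hypothetical helper name; it takes the GPUs per node and a list of `zone:replicas` pairs mirroring the placement section of the example specification.

```shell
# group_totals GPUS_PER_NODE ZONE:REPLICAS...
# Sums replicas over all placement entries and derives the GPU capacity of the group.
group_totals() {
  gpus_per_node=$1
  shift
  nodes=0
  for pair in "$@"; do
    replicas=${pair#*:}              # strip the "zone:" prefix
    nodes=$((nodes + replicas))
  done
  echo "nodes=$nodes gpus=$((nodes * gpus_per_node))"
}

# The a2-ultragpu-8g group from the example: 3 zones x 50 replicas, 8 GPUs per node.
group_totals 8 use1:50 use2:50 use3:50   # prints: nodes=150 gpus=1200
```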

Scheduling and monitoring of workloads

A typical scheduling experimentation workflow consists of a cluster bootstrap, scheduling and monitoring of the workloads, iteration on the cluster or workload configuration, and tearing down the cluster once experiments are complete.

To demonstrate how KEMU helps with the experimentation, we will create a 2,550-node cluster with A100, H100, and H200 Nvidia GPUs distributed across 3 availability zones (the cluster specification is available in kemu/examples/gcp-large.yaml on GitHub). Once the cluster is up and running, we will create a number of jobs to saturate the cluster, observe the allocation, and modify scheduling constraints to understand the impact they have on scheduling efficiency.

To create a cluster and explore it using Prometheus stack, follow these steps:

    # Create KEMU cluster:
    kemu create-cluster --name gcp-large --kubeconfig $(pwd)/kemu.config --cluster-config https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/gcp-large.yaml

    # Retrieve Grafana password:
    kubectl --kubeconfig $(pwd)/kemu.config get secret prometheus-grafana -n monitoring -o jsonpath='{.data.admin-password}' | base64 -d

    # Expose Grafana port on the localhost:
    kubectl --kubeconfig $(pwd)/kemu.config port-forward --namespace monitoring svc/prometheus-grafana 8080:80

After the cluster bootstrap is complete and port forwarding is enabled, a Grafana instance becomes available at http://localhost:8080/ and requires authentication with the username admin and the retrieved password. Navigate to the pre-installed cluster overview dashboard for cluster-level information about cluster composition, available and allocated capacity, and their distribution across availability zones and device types.

Optimal scheduling example

Let’s create some synthetic Jobs that require different GPU types and observe the cluster saturation. The number of jobs, their parallelism, and resource requirements are designed to demonstrate 100% GPU allocation (2,550 pods × 8 GPUs = 20,400 GPUs allocated out of 20,400 available).

Below is an example job manifest for a distributed A100 job with 100 workers. Note the annotation pod-complete.stage.kwok.x-k8s.io/delay: "10m", which tells KWOK how long to keep pods in the Running state before marking them completed. Note also that no scheduling constraints are specified for this job beyond the node affinity and tolerations that land its pods on KWOK nodes with a particular GPU type.

    apiVersion: batch/v1
    kind: Job
    metadata:
      generateName: job-a100-no-az-affinity-
    spec:
      completions: 100
      parallelism: 100
      template:
        metadata:
          annotations:
            pod-complete.stage.kwok.x-k8s.io/delay: "10m"
        spec:
          restartPolicy: Never
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: datastrophic.io/gpu-type
                    operator: In
                    values: ["nvidia-a100-80gb"]
                  - key: type
                    operator: In
                    values: ["kwok"]
          tolerations:
          - effect: "NoSchedule"
            key: "kwok.x-k8s.io/node"
            value: "fake"
          containers:
          - name: fake-container
            image: fake-image
            resources:
              requests:
                cpu: 180
                memory: 1100Gi
                nvidia.com/gpu: 8
              limits:
                cpu: 180
                memory: 1100Gi
                nvidia.com/gpu: 8

For convenience, we will use the workload specs from kemu/examples/workloads and create 15 A100 jobs with 100 workers each, 15 H100 jobs with 50 workers each, and 12 H200 jobs with 25 workers each.

    # Note: Pods will run for 10 minutes before completing (configured via KWOK annotation).
    for i in {1..15}; do
      kubectl --kubeconfig $(pwd)/kemu.config create -f https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-a100-no-affinity-100-workers.yaml
    done

    for i in {1..15}; do
      kubectl --kubeconfig $(pwd)/kemu.config create -f https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-h100-no-affinity-50-workers.yaml
    done

    for i in {1..12}; do
      kubectl --kubeconfig $(pwd)/kemu.config create -f https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-h200-no-affinity-25-workers.yaml
    done
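The 100% allocation figure quoted earlier follows directly from these job counts. As a quick check of the arithmetic, using only the numbers stated in this section (every pod requests a full 8-GPU node):

```shell
# Pods requested per job family: number of jobs x workers per job.
a100_pods=$((15 * 100))
h100_pods=$((15 * 50))
h200_pods=$((12 * 25))
total_pods=$((a100_pods + h100_pods + h200_pods))

# Each pod requests 8 GPUs.
total_gpus=$((total_pods * 8))

echo "pods=$total_pods gpus=$total_gpus"   # prints: pods=2550 gpus=20400
```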

Once the jobs are created, navigate to the Grafana dashboard to observe cluster saturation and workload status.

As we can see, all jobs ran in parallel, resulting in 2,550 running pods distributed evenly across 3 availability zones. No pods were stuck in pending state, and overall cluster GPU allocation achieved 100%. This level of cluster allocation is rarely achieved in production environments, where scheduling constraints like availability zone affinity, anti-affinity rules, and resource fragmentation typically reduce utilization. The following section demonstrates the impact of adding just one common constraint.

Suboptimal scheduling example

Availability zone affinity is a common requirement for distributed batch jobs: it improves network performance and avoids cross-AZ traffic costs. We will apply the following podAffinity rule to the jobs from the previous example and repeat the experiment:

    affinity:
      podAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchExpressions:
            - key: "datastrophic.io/workload"
              operator: In
              values:
              - "JOB_ID"

For convenience, workload templates are provided in kemu/examples/workloads.

    # Note: Pods will run for 10 minutes before completing (configured via KWOK annotation).
    for i in {1..15}; do
      curl -s https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-a100-az-affinity-100-workers.tmpl | \
        sed "s/JOB_ID/a100-job-with-affinity-${i}/g" | \
        kubectl --kubeconfig $(pwd)/kemu.config create -f -
    done

    for i in {1..15}; do
      curl -s https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-h100-az-affinity-50-workers.tmpl | \
        sed "s/JOB_ID/h100-job-with-affinity-${i}/g" | \
        kubectl --kubeconfig $(pwd)/kemu.config create -f -
    done

    for i in {1..12}; do
      curl -s https://raw.githubusercontent.com/datastrophic/kemu/refs/tags/v0.1.0/examples/workloads/job-h200-az-affinity-25-workers.tmpl | \
        sed "s/JOB_ID/h200-job-with-affinity-${i}/g" | \
        kubectl --kubeconfig $(pwd)/kemu.config create -f -
    done

Once the jobs are created, navigate to the Grafana dashboard to observe cluster saturation and workload status.

From the dashboard, we can see that while all jobs are in the Running state, there are pods in the Pending state while unallocated capacity remains available.

What we’re observing is partial admission, a common scheduling problem in Kubernetes clusters. The Kubernetes Scheduler processes pods individually without job-level awareness. Pods created by jobs are not scheduled as a batch but one by one, which leads to fragmentation and an inability to schedule all pods even when cluster capacity is available.
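The effect can be reproduced with a toy model. The sketch below is not the Kubernetes scheduler: it assumes one pod per node, 3 zones with 500 A100 nodes each (the gcp-large specification is not shown here, so this shape is an assumption), and a hypothetical input listing the zone in which the first pod of each job happens to land. Every later pod of a job is pinned to that zone, and pods are placed one at a time with no job-level view.

```shell
# simulate_az_affinity ZONES ZONE_CAPACITY PODS_PER_JOB "FIRST_POD_ZONE_PER_JOB"
# Toy model: one pod occupies one node, and every pod of a job must share the
# zone of the job's first pod (required zone-level pod affinity).
simulate_az_affinity() {
  awk -v zones="$1" -v cap="$2" -v pods="$3" -v picks="$4" 'BEGIN {
    njobs = split(picks, zone_of_job, " ")
    # Pods are placed one at a time, interleaved across jobs.
    for (p = 1; p <= pods; p++) {
      for (j = 1; j <= njobs; j++) {
        z = zone_of_job[j]
        if (used[z] < cap) { used[z]++; running++ } else { pending++ }
      }
    }
    printf "running=%d pending=%d free=%d\n", running, pending, zones * cap - running
  }'
}

# 15 jobs x 100 pods; the first pods of 6, 5, and 4 jobs land in zones 1, 2, and 3.
simulate_az_affinity 3 500 100 "1 1 1 1 1 1 2 2 2 2 2 3 3 3 3"
# prints: running=1400 pending=100 free=100
```

With a perfectly balanced 5/5/5 split every pod fits, but a single extra job in one zone leaves 100 pods pending while 100 nodes sit idle in another zone, which matches the pattern seen on the dashboard.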

For distributed training workloads that require all workers to start simultaneously (all-or-nothing semantics), this partial admission creates a deadlock situation. This simple experiment reveals a scheduling issue that would be expensive to discover in production. With KEMU, you can:

- Test different node distributions across availability zones;

- Experiment with gang scheduling solutions (Volcano, YuniKorn);

- Measure the impact of scheduling policies on cluster allocation and utilization;

- Validate scheduler behavior under different constraint scenarios.

To iterate on solutions, you could modify the cluster specification to adjust zone distribution, install a gang scheduler addon, or test different scheduling policies, all without touching production infrastructure. When you’re done experimenting, clean up with kemu delete-cluster.

Conclusion

Emulated clusters provide safe environments for testing scheduling optimizations at scale without putting production infrastructure at risk. Existing solutions such as Kind and KWOK are battle-tested and widely used by the community for solving this problem.

However, the end-to-end cluster bootstrap using the existing technologies suffers from the proliferation of tools, fragmented configuration, the lack of native support for cluster addons, and the multi-step process required for configuring the desired cluster shape.

KEMU addresses these problems with a single opinionated utility driven by declarative configuration. Once defined, an emulated cluster can be reliably recreated by a human or CI system in minutes without custom scripting or extensive templating. Changing cluster shape, size, capacity, or pre-installed software only requires updating the cluster specification.

KEMU eliminates the complexity of emulated cluster management, freeing you from maintaining boilerplate scripts and configuration files so you can focus on experimentation and rapid iteration. Head over to the KEMU GitHub repository to get started. We’d love your feedback and contributions!

References

- Scale Your Batch / Big Data / AI Workloads Beyond the Kubernetes Scheduler - a KubeCon talk that brought original inspiration for my interest in automation of the emulated clusters.

- Kubernetes E2E Framework is an amazing work of the community in the Kubernetes testing space. KEMU relies on its API for Kind cluster management.

- Go Helm Client is used in KEMU for managing Helm Chart installations.

- kind and KWOK are well-known tools for cluster emulation KEMU relies on.